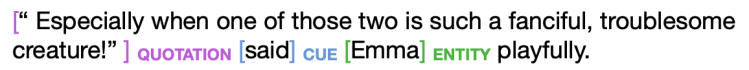

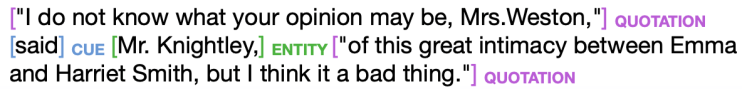

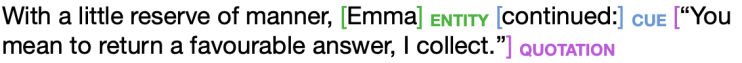

Quotation detection is an important processing step in a variety of applications in machine learning, including generative applications like audiobook or script generation and classification applications such as automated fact checking.

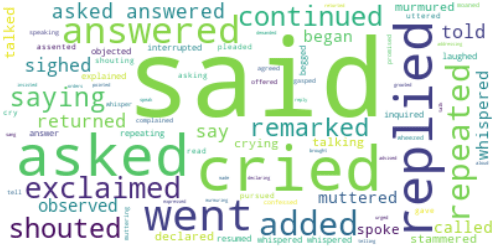

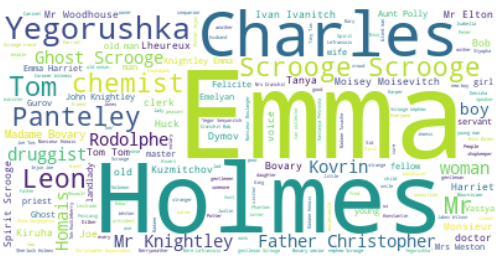

RiQuA (Rich Quotation Annotations) is a corpus of annotated quotations for English literary text. The RiQuA dataset consists of 15 excerpts from 11 works of 19th-century English literature by 6 authors. The works include Jane Austen's "Emma", Anton Chekhov's "The Lady with the Dog", and Mark Twain's "The Adventures of Tom Sawyer".

Quotation detection can be challenging because most words are not significantly more likely to appear inside a quotation than outside one, so a quotation detection model needs some understanding of the context surrounding each word. In this project, we experiment with various models for quotation detection, focusing on methods that do not require excessive feature engineering. We evaluate a simple most-common-tag baseline, a number of supervised models trained on BERT embeddings, and a fine-tuned BERT model, and we find that BERT is able to embed the context around each token and significantly improve performance over the baseline.
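To make the baseline concrete, here is a minimal sketch of a most-common-tag tagger. The tag names (`O`, `CUE`, `QUOTE`), the function names, and the toy sentences are illustrative assumptions, not the RiQuA label scheme or the project's actual code. Each token is assigned the tag it received most often in training, and unseen tokens fall back to the overall most frequent tag.

```python
from collections import Counter, defaultdict


def train_most_common_tag(tagged_sentences, default_tag="O"):
    """Build a token -> most frequent tag lookup from (token, tag) pairs."""
    per_token = defaultdict(Counter)
    overall = Counter()
    for sentence in tagged_sentences:
        for token, tag in sentence:
            per_token[token.lower()][tag] += 1
            overall[tag] += 1
    # Unseen tokens get the most frequent tag in the training data.
    fallback = overall.most_common(1)[0][0] if overall else default_tag
    lookup = {tok: c.most_common(1)[0][0] for tok, c in per_token.items()}
    return lookup, fallback


def predict(tokens, lookup, fallback):
    """Tag each token independently, ignoring all surrounding context."""
    return [lookup.get(tok.lower(), fallback) for tok in tokens]


# Toy training data with hypothetical tags (not taken from RiQuA).
train = [
    [("she", "O"), ("said", "CUE"), (",", "O"), ("hello", "QUOTE"), ("there", "QUOTE")],
    [("he", "O"), ("said", "CUE"), ("there", "O"), ("is", "O"), ("hope", "O")],
    [("there", "O"), ("she", "O"), ("goes", "O")],
]
lookup, fallback = train_most_common_tag(train)
predicted = predict(["He", "said", "hello", "there", "friend"], lookup, fallback)
print(predicted)
```

Because the lookup ignores context, a word such as "there" always receives the same tag whether or not it sits inside a quotation, which is the limitation that the context-aware models are meant to address.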

We found that, as expected, the most-common-tag baseline has some success in identifying cue words but fails almost entirely at identifying quotations, and the BERT-based models, which are able to capture more context, perform better. The fine-tuned model performs best, with an F1 score of 0.75 both on quotations and overall, falling somewhat short of the state of the art of around 0.85. KNN and Naive Bayes both significantly outperformed the most-common-tag baseline but still fell far short of the fine-tuned model, achieving F1 scores of 0.20 and 0.23, respectively, on quotations in the test set. The Logistic Regression model, however, approached the performance of the fine-tuned model, reaching 0.65 F1 on quotations in the test set.
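For readers unfamiliar with per-tag F1, the following sketch shows one way such a score can be computed at the token level. It is an illustration only: the text above does not specify whether the reported scores are token-level or span-level, and the tag names and example sequences are hypothetical.

```python
def f1_for_tag(gold, pred, tag):
    """Token-level F1 for one tag (an assumed evaluation granularity)."""
    pairs = list(zip(gold, pred))
    tp = sum(1 for g, p in pairs if g == tag and p == tag)
    fp = sum(1 for g, p in pairs if g != tag and p == tag)
    fn = sum(1 for g, p in pairs if g == tag and p != tag)
    if tp == 0:
        # No correct predictions for this tag: precision or recall is zero.
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Hypothetical gold and predicted tag sequences for a five-token sentence.
gold = ["O", "CUE", "QUOTE", "QUOTE", "O"]
pred = ["O", "CUE", "QUOTE", "O", "O"]

quote_f1 = f1_for_tag(gold, pred, "QUOTE")  # precision 1.0, recall 0.5
cue_f1 = f1_for_tag(gold, pred, "CUE")      # precision 1.0, recall 1.0
print(round(quote_f1, 3), cue_f1)
```

Reporting F1 separately for quotations and cues, as done above, keeps a model from looking strong simply because it labels the many non-quotation tokens correctly.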