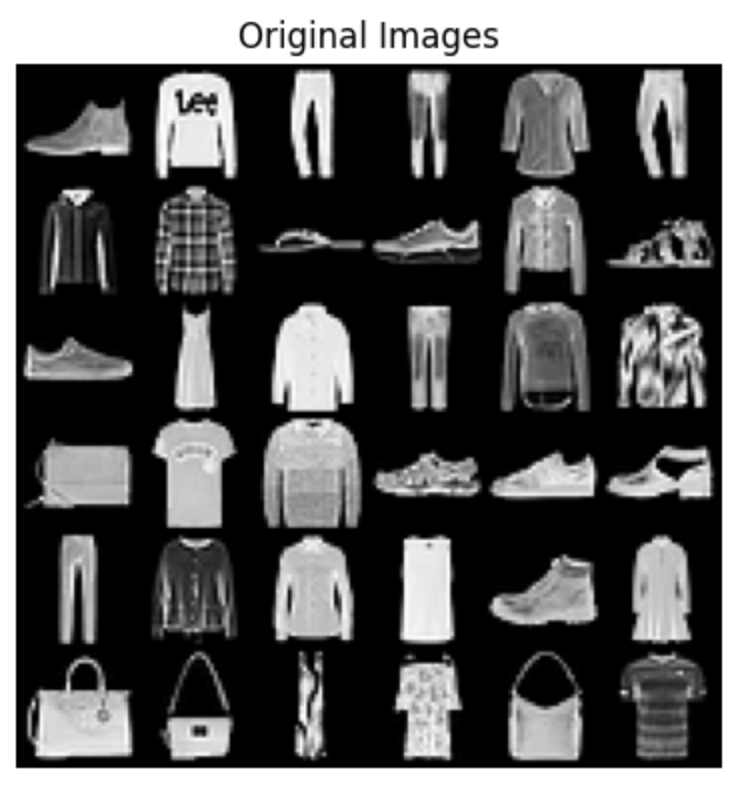

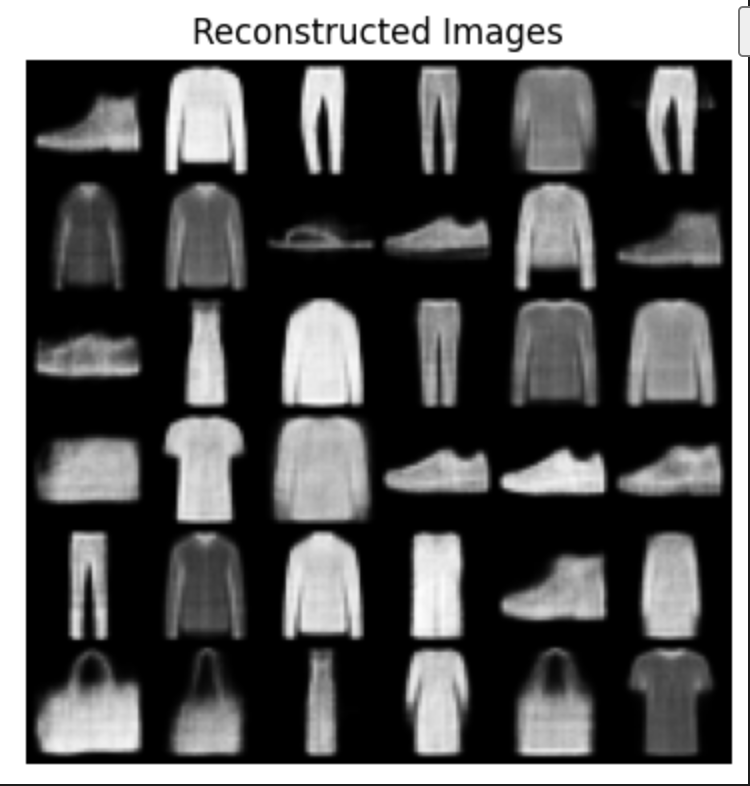

VAE:

Implemented a Variational Autoencoder (VAE) on the Fashion MNIST dataset. The encoder network learns a probabilistic mapping from each input image to a distribution over a latent space, and the decoder reconstructs the image from a sample drawn from that distribution.
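The probabilistic mapping can be illustrated with a minimal, framework-free sketch of the two pieces a VAE usually relies on: the reparameterization trick and a loss that adds a KL term to the reconstruction error. The function names, the binary cross-entropy reconstruction term, and the standard-normal prior are assumptions chosen for illustration; they are not taken from the project code.

```python
import math
import random


def reparameterize(mu, log_var, rng=random):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1), one draw per latent dimension."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def kl_divergence(mu, log_var):
    """KL(N(mu, sigma^2) || N(0, 1)), summed over latent dimensions."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, log_var))


def bce_reconstruction(x, x_hat, eps=1e-7):
    """Binary cross-entropy between pixels in [0, 1] and their reconstruction."""
    total = 0.0
    for target, pred in zip(x, x_hat):
        pred = min(max(pred, eps), 1.0 - eps)  # clamp to keep log() finite
        total -= target * math.log(pred) + (1.0 - target) * math.log(1.0 - pred)
    return total


def vae_loss(x, x_hat, mu, log_var):
    """Negative ELBO for one image: reconstruction term plus KL regularizer."""
    return bce_reconstruction(x, x_hat) + kl_divergence(mu, log_var)


# Toy usage: a 4-pixel "image" and a 2-dimensional latent code (hypothetical values).
mu, log_var = [0.3, -0.2], [-1.0, -0.5]
z = reparameterize(mu, log_var, random.Random(0))
loss = vae_loss([0.0, 1.0, 1.0, 0.0], [0.1, 0.8, 0.7, 0.2], mu, log_var)
```

In a real model, `mu` and `log_var` would be the encoder outputs and `x_hat` the decoder output for `z`. Sampling through `mu + sigma * eps` is what keeps the sampling step differentiable with respect to the encoder parameters.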

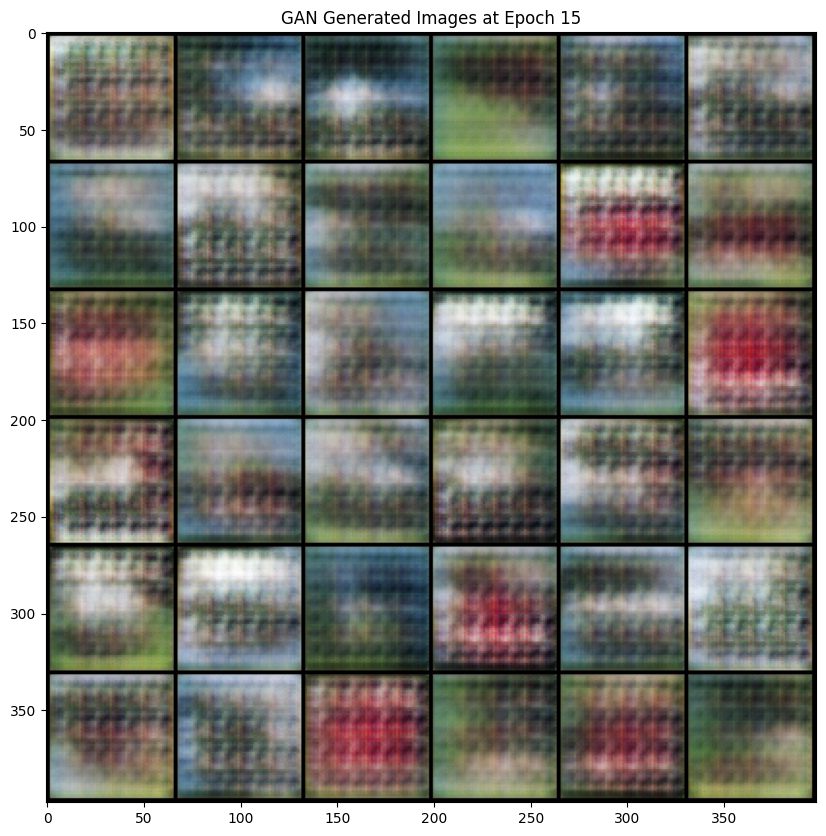

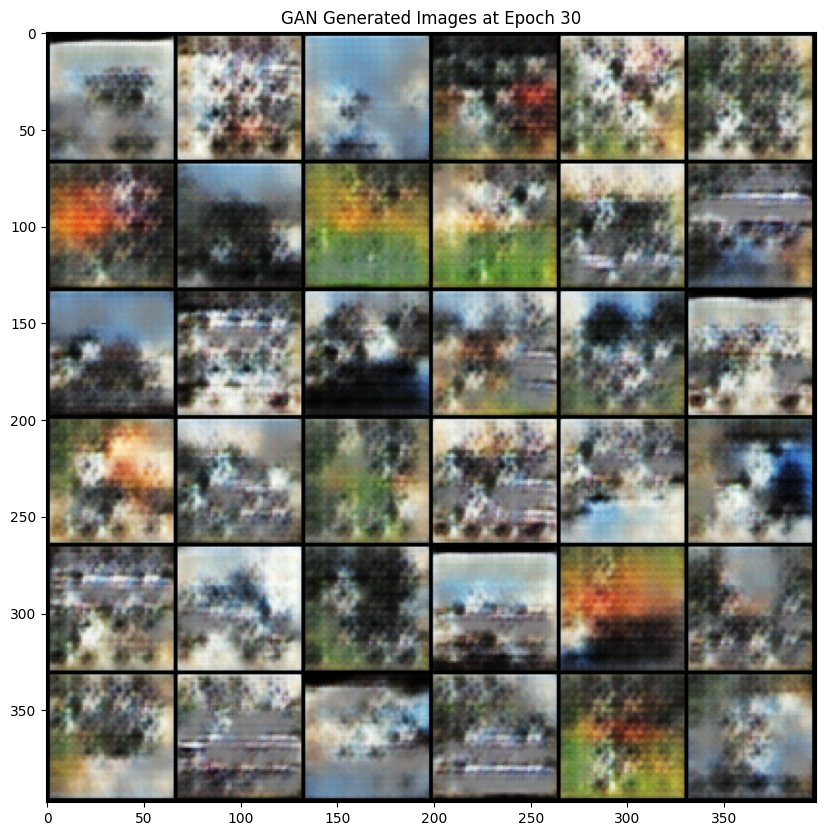

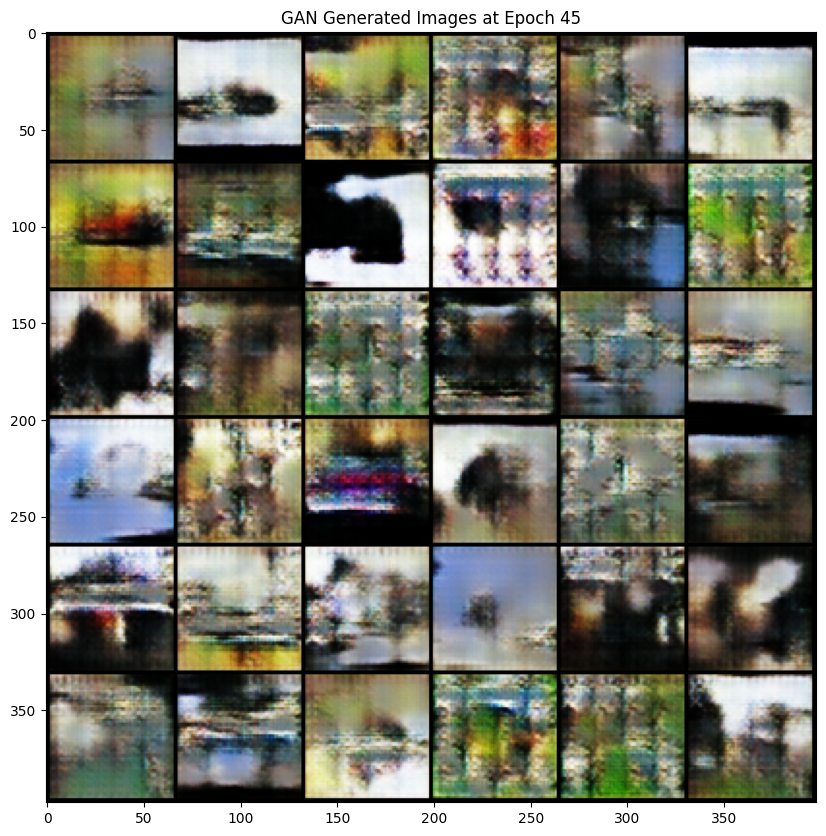

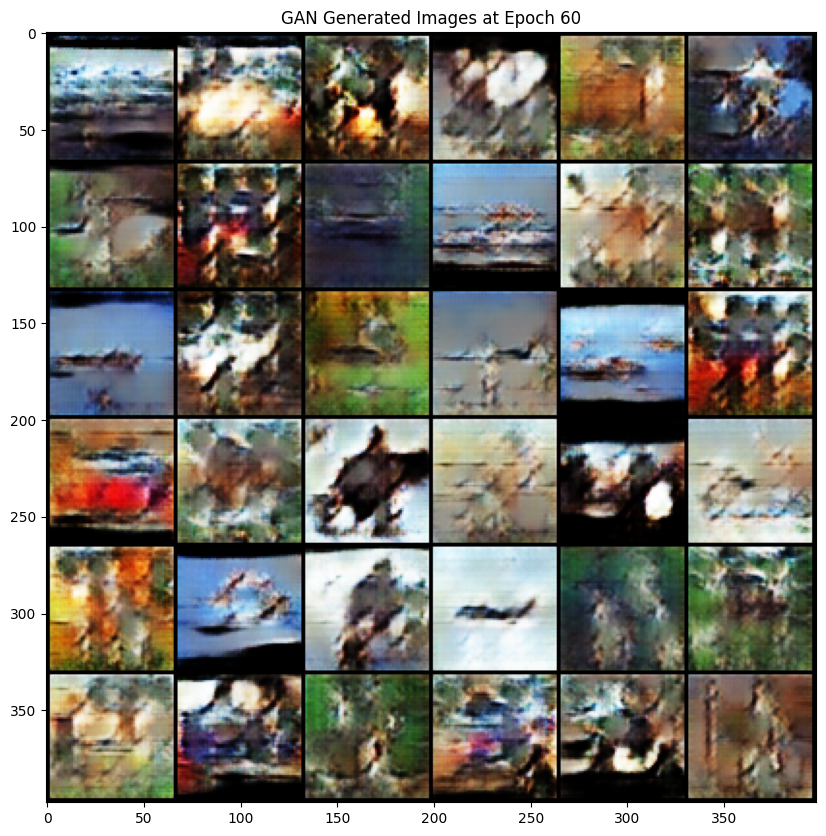

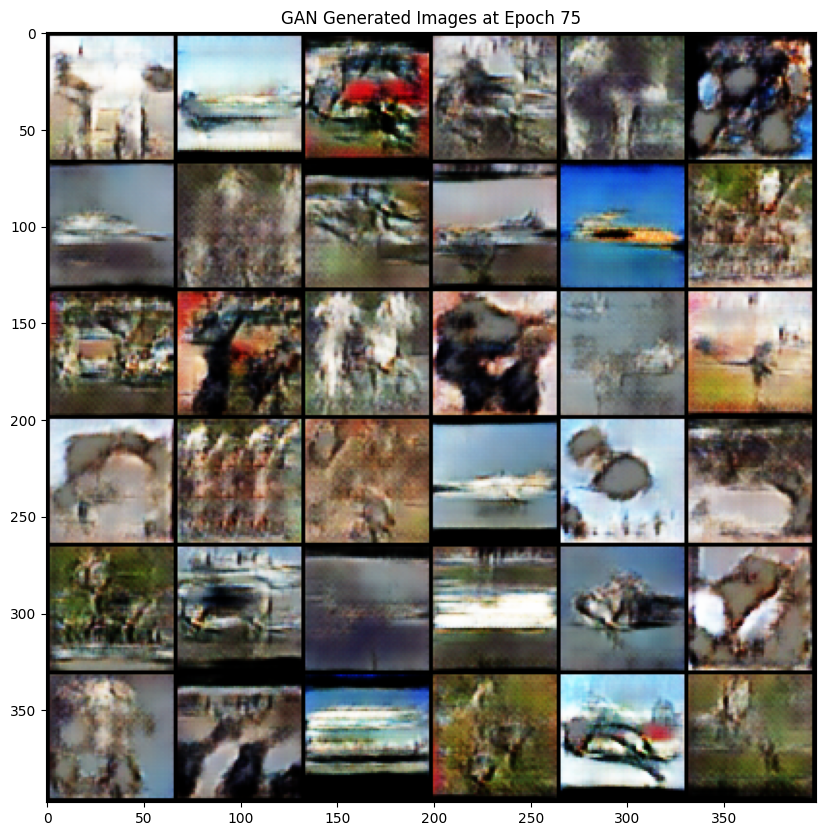

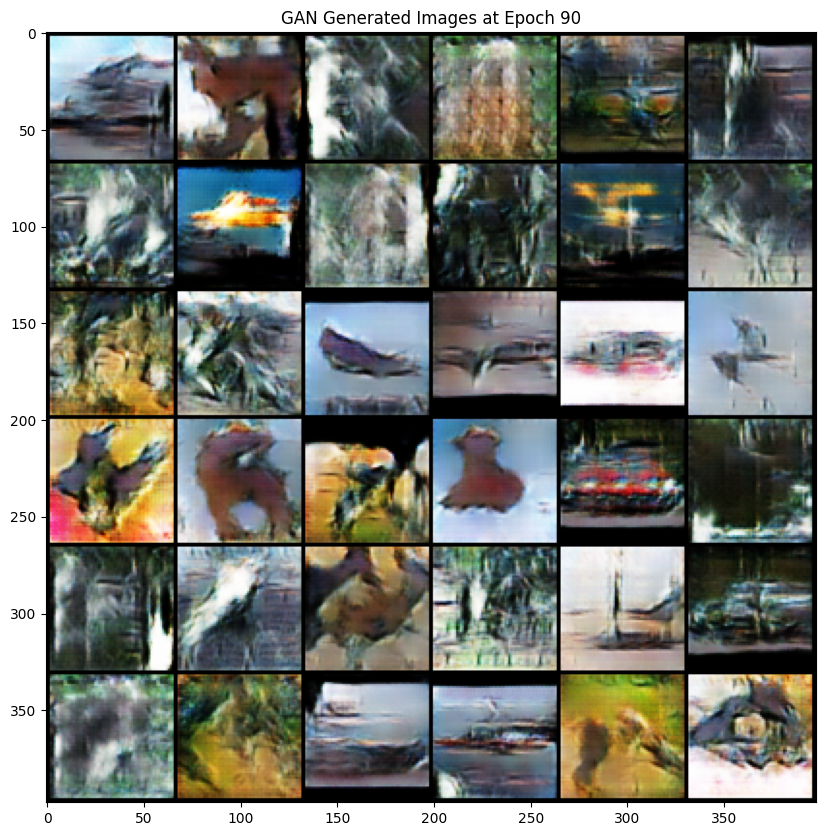

GAN:

Implemented a GAN from scratch, following the GAN research paper, and trained it on the STL-10 dataset. A GAN is an unsupervised generative model that learns to produce realistic images from random latent codes. The objective function is a min-max game: the discriminator is trained to maximize the value function by telling real images from generated ones, while the generator is trained to minimize it by fooling the discriminator. Implemented several tricks to address potential issues such as mode collapse, including normalization, a Tanh activation in the generator’s last layer, a Gaussian distribution for the latent code, and a modified loss function.
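The min-max game can be sketched with plain Python on discriminator outputs. This is an illustration only: the function names are hypothetical, and reading the "modified loss function" as the non-saturating generator loss (maximize log D(G(z)) instead of minimizing log(1 - D(G(z)))) is an assumption, since the text does not say which modification was used.

```python
import math
import random


def sample_latent(dim, rng=random):
    """Draw a latent code z ~ N(0, I), matching the Gaussian latent prior."""
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]


def _safe_log(p, eps=1e-7):
    """log(p) with p clamped away from 0 and 1 for numerical stability."""
    return math.log(min(max(p, eps), 1.0 - eps))


def discriminator_loss(d_real, d_fake):
    """-[log D(x) + log(1 - D(G(z)))], each term averaged over its batch."""
    real_term = sum(_safe_log(p) for p in d_real) / len(d_real)
    fake_term = sum(_safe_log(1.0 - p) for p in d_fake) / len(d_fake)
    return -(real_term + fake_term)


def generator_loss_minimax(d_fake):
    """Original min-max generator loss: mean of log(1 - D(G(z)))."""
    return sum(_safe_log(1.0 - p) for p in d_fake) / len(d_fake)


def generator_loss_non_saturating(d_fake):
    """Assumed 'modified' loss: -mean of log D(G(z)), with stronger early gradients."""
    return -sum(_safe_log(p) for p in d_fake) / len(d_fake)


# Toy usage: D(x) and D(G(z)) are probabilities in (0, 1) (hypothetical values).
z = sample_latent(100, random.Random(0))
d_loss = discriminator_loss(d_real=[0.9, 0.8], d_fake=[0.2, 0.1])
g_loss = generator_loss_non_saturating(d_fake=[0.2, 0.1])
```

Both generator losses decrease as the discriminator assigns higher probability to generated images. With a Tanh output layer, real images would typically be rescaled to [-1, 1] so that both inputs to the discriminator share the same range.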

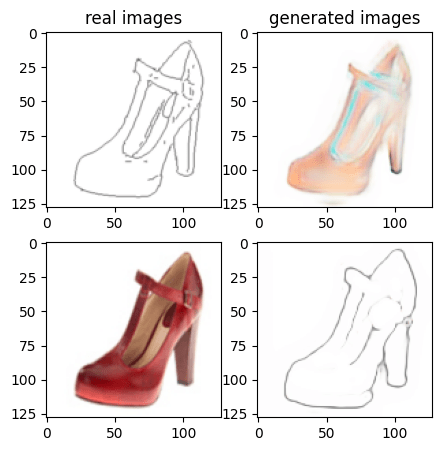

CycleGAN:

Implemented CycleGAN for image-to-image translation without paired training images, using the Edges2shoes dataset. The network architecture follows the research paper: the generators have an encoder-decoder structure built from convolutions, instance normalization, and residual blocks, with reflection padding applied to reduce artifacts. The objective combines adversarial losses, which push generated images to be indistinguishable from real images of the target domain, with cycle consistency losses, which require that translating an image to the other domain and back recovers the original. Training involves two generators (G: X → Y, F: Y → X) and two discriminators (D_Y, D_X). The code was developed from scratch, adhering to the architecture and methodology outlined in the paper.
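The cycle consistency term can be sketched independently of any deep learning framework. In the snippet below the generators are stand-in Python functions on flat lists of pixel values, and the names and the weight `lam=10.0` (the value used in the CycleGAN paper) are assumptions for illustration rather than details from the project code.

```python
def l1_distance(a, b):
    """Mean absolute difference between two equally sized images."""
    return sum(abs(p - q) for p, q in zip(a, b)) / len(a)


def cycle_consistency_loss(G, F, x_batch, y_batch, lam=10.0):
    """lam * (E_x |F(G(x)) - x| + E_y |G(F(y)) - y|) over unpaired batches."""
    forward = sum(l1_distance(F(G(x)), x) for x in x_batch) / len(x_batch)
    backward = sum(l1_distance(G(F(y)), y) for y in y_batch) / len(y_batch)
    return lam * (forward + backward)


# Toy generators (hypothetical): G doubles every pixel, F halves it,
# so F undoes G exactly and the cycle loss is zero.
def G(image):
    return [2.0 * p for p in image]


def F(image):
    return [0.5 * p for p in image]


x_batch = [[1.0, -1.0]]  # samples from domain X
y_batch = [[0.5, 0.5]]   # unpaired samples from domain Y
perfect = cycle_consistency_loss(G, F, x_batch, y_batch)
broken = cycle_consistency_loss(G, lambda image: image, x_batch, y_batch)
```

Because the loss only compares each image with its own round trip, the two batches never need to be paired. The full objective would add the adversarial terms for D_Y and D_X to this value.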

Code: click here